现实的世界是一个拥有宽度、高度和深度的三维立体世界。在平面二维显示技术已经成熟的今天,三维立体显示技术首当其冲的成为了当今显示技术领域的研究热点。

本作品搭建了基于stm32f4的三维旋转显示平台,它的显示原理属于三维显示中的体三维显示一类。它是通过适当方式来激励位于透明显示体内的物质,利用可见辐射光的产生三维体像素。当体积内许多方位的物质都被激励后,便能形成由许多分散的体像素在三维空间内构成三维图像。

体三维显示又称为真三维显示,因为他所呈现的图像在真实的三维空间中,展示一个最接近真实物体的立体画面,可同时允许多人,多角度裸眼观看场景,无序任何辅助眼镜。

本作品的特点在于,利用stm32f4的浮点运算能力,实现了低成本的体三维显示数据的生产,并利用类似分布式处理的系统结构,满足了体三维显示所需要的巨大数据吞吐量,等效吞吐量可达约300Mb/s

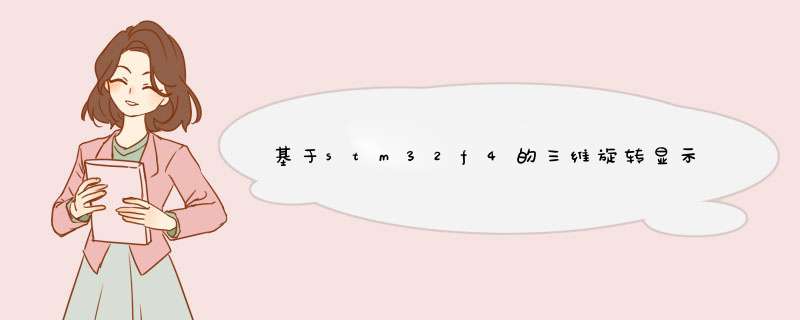

1.系统方案众所周知人眼在接收被观察物体的信息时,携带物体信息的光信号通过人眼细胞及神经传入大脑神经,光的作用时间只是一个很短暂的时间段,当光的作用时间结束后,视觉影像并不会立即消失,这种残留的视觉称“后像”,视觉的这一现象则被称为“视觉暂留”( duraTIon of vision)物体在快速运动时, 当人眼所看到的影像消失后,人眼仍能继续保留其影像0.1---0.4秒左右的图像。在空间立体物体离散化的基础上,再利用人眼视觉暂留效应,基于LED阵列的“三维体扫描”立体显示系统便实现了立体显示效果。如图1所示,以显示一个空心的边长为单位1的正方体为例。LED显示阵列组成的二维显示屏即为正方体每个离散平面的显示载体,LED显示屏上的被点亮的LED即为正方体的平面离散像素。我们将该LED显示平面置于轴对称的角度机械扫描架构内,在严格机电同步的立体柱空间内进行各离散像素的寻址、赋值和激励,由于机械扫描速度足够快,便在人眼视觉上形成一个完整的正方体图像。图1(a)所示为立方体0度平面二维切片图,图1(b)所示为立方体45度平面二维切片图,图1(c)所示为立方体135度平面二维切片图,图1(d)所示为立方体180度平面二维切片图,图1(e)所示为观看者观察到的完整立方体显示效果。

图1

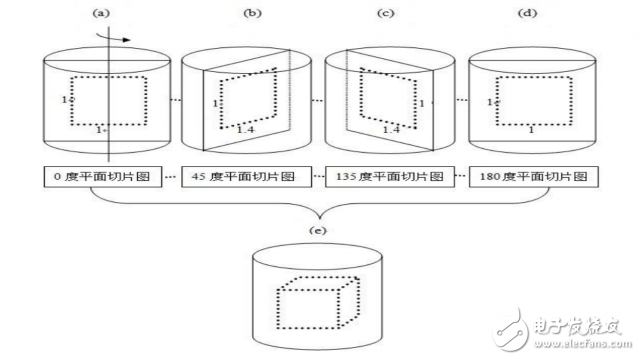

系统方案如图2所示,整个系统由四个模块组成,其中数据获取单元主要由在PC上的上位机完成,利用3D-Max,OpenCV,OpenGL,将三维建模数据转化成三维矢量表述文件,传给由STM32F4 Discovery开发板构成的控制单元,利用其上的角度传感器,结合wifi模块或以太网模块通过电力线模式传给LED旋转屏单元,其中的STM32F4负责将ASE文件解析成LED显示阵列所需的点云数据流,通过串行总线传输给由FPGA驱动的LED显示阵列,通过LED刷新速率与机械单元旋转速率相匹配,从而实现体三维显示的效果。

图2

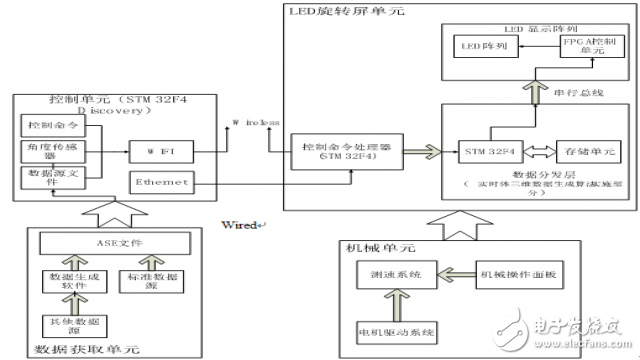

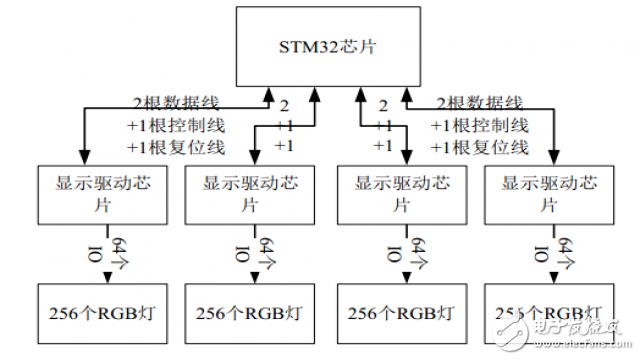

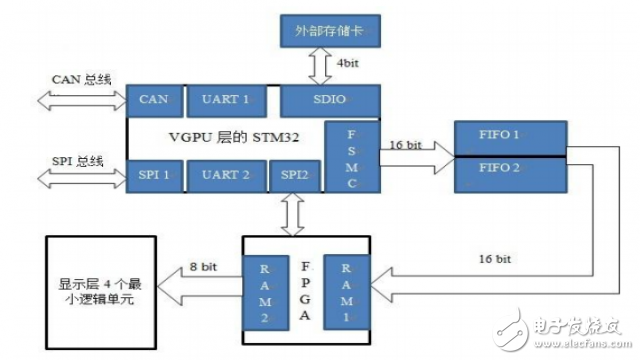

2.系统硬件设计系统的机械部分如图3所示,显示面板的硬件结构如图4,图5所示。本系统的底部是直流电机和碳刷,直流电机主要负责带动上层的显示屏幕高速旋转,而碳刷则负责传递能量和通信信号。在显示屏幕的正面是由96*128构成的三色LED点阵,FPGA的PWM信号通过驱动芯片控制三色LED从而实现真彩显示。在屏幕背面由多块STM32F4,SD卡,FIFO构成,主要负责解析由控制单元传过来的ASE文件,并实时生成体三维显示数据,并传给LED灯板的驱动FPGA,并通过其实现最终的图像显示。

图3

图4

图5

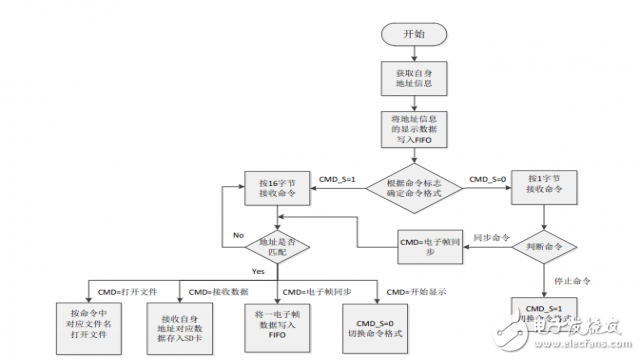

3.系统软件设计3.1软件控制流程:

3.2关于实时生成体三维显示数据的讨论:

一个瓦片64*32

LED层FPGA*8:每个16*16LED

中间层stm32*2:每个4LED层的FPGA,也即32*32

由于经过压缩,一个led数据为4bits

所以一个stm32每一帧所要生成的数据为32*32*0.5bytes = 512bytes

转速800转,一帧1/800s = 1.25ms = 1250000ns

stm32f4主频168Mhz,指令周期 = 5.93ns

约可执行20万多条指令

假设fsmc总线的速度为50Mhz,则每帧写入的时间大概在0.02ms内

程序总体思路

事先算出所有电子帧上非零的点,以及连续0的个数,在每一个电子帧同步后,算出生成下一帧的数据,写入fifo

输入:线段端点的集合

//input: endpoints of segments which formed the outline of a 3D model

//x posiTIon with range 0-95

//y posiTIon with range 0-95

//z posiTIon with range 0-128

/******************************************/

//from later discussion, one of the Q format

//type should replace the char type

/******************************************/

struct Coordinate_3D

{

_iq xPosition;

_iq yPosition;

_iq zPosition;

};

//after you get the intersection points in 3d coordinate, you need to remap it into 2d coordinate on the very electrical plane,

//and the conversion is quite simple Coordinate_2D.yPosition = Coordinate_3D.zPosition; Coordinate_2D.xPosition = sqrt(xPosition^2+yPosition^2)

struct Coordinate_2D

{

char xPosition;

char yPosition;

};

struct Line

{

struct Coordinate_3D beginPoint;

struct Coordinate_3D endPoint;

unsigned char color;

};

//frame structure to store the visible points in one electrical frame

//need to be discussed

//here's the prototype of the Frame structure, and basically the frame struture should contain the visible points,

//and the zero points. As we have enclosed the number of zero points after each visible points in their own data structure,

//only the number of zero points at the beginning of the whole frame should be enclosed in the frame struture

struct Frame

{

int zerosBefore;

PointQueue_t visiblePointQueue;

};

//we need a union structure like color plane with bit fields to store the color imformation of every four FPGAs in one data segment

//actually, it's a kind of frustrateing thing that we had to rebind the data into such an odd form.

union ColorPalette

{

struct

{

unsigned char color1 : 4;

unsigned char color2 : 4;

unsigned char color3 : 4;

unsigned char color3 : 4;

}distributedColor;

unsigned short unionColor;

};

//and now we need a complete point structure to sotre all the imformation above

//here we add a weight field = yPosition*96 + xPosition, which will facilitate

//our sort and calculation of the zero points number between each visible point

//it's important to understand that, 4 corresponding points on the LED panel

//will share one visiblepoint data structure.(一块stm32负责4块16*16的LED,每块对应的点的4位颜色信息,拼成16位的数据段)

struct VisiblePoint

{

struct Coordinate_2D coord;

union Colorplane ColorPalette;

int weight;

int zerosAfter;

};

//as now you can see, we need some thing to store the visible points array

typedef struct QueueNode

{

struct VisiblePoint pointData;

struct QueueNode * nextNode;

}QueueNode_t, *QueueNode_ptr;

typedef struct

{

QueueNode_ptr front;

QueueNode_ptr rear;

}PointQueue_t;

//finally, we will have 16*16 words(16 bits)to write into the fifo after each electrial frame sync cmd.

//it may hard for us to decide the frame structure now, let's see how will the work flow of the algorithm be.

//firstly, the overall function will be like this

void Real3DExt(struct Line inputLines[], int lineNumber, struct Frame outputFrames[])

//then we need some real implementation function to calculate the intersection points

//with 0 = no intersection points, 1 = only have one intersection points, 2 = the input line coincides the given electrical plane

//2 need to be treated as an exception

//the range of the degree is 0-359

//it's important to mention that each intersection point we calculate, we need to

//remap its coordinate from a 32*32 field to x,y = 0-15, as each stm32 only have a 32*32

//effective field(those intersection points out of this range belong to other stm32), which can be decided by its address

int InterCal(struct Line inputLine, struct VisiblePoint * outputPoint, int degree)

//so we will need something like this in the Real3DExt function:

for (int j = 0; j < 360; j++)

{

for(int i = 0; i < lineNumber; i++ )

InterCal(struct Line inputLine, struct VisiblePoint outputPoint, int degree);

......

}

/******************************************/

//simple float format version of InterCal

/******************************************/

//calculate formula

//Q = [-1,1,-1];

//P = [1,1,-1];

//V = Q - p = [-2,0,0];

//Theta = pi/6;

//Tmp0 = Q(1)*sin(Theta) - Q(2)*cos(Theta);

//Tmp1 = V(1)*sin(Theta) - V(2)*cos(Theta);

//Result = Q - (Tmp0/Tmp1)*V

float32_t f32_point0[3] = {-1.0f,1.0f,-1.0f};

float32_t f32_point1[3] = {1.0f,1.0f,-1.0f};

float32_t f32_directionVector[3], f32_normalVector[3], f32_theta,

f32_tmp0, f32_tmp1, f32_tmp2, f32_result[3];

arm_sub_f32(f32_point0,f32_point1,f32_directionVector,3);

f32_theta = PI/6.0f;

f32_normalVector[0] = arm_sin_f32(f32_theta);

f32_normalVector[1] = arm_cos_f32(f32_theta);

f32_normalVector[2] = 0.0f;

arm_dot_prod_f32(f32_point0, f32_normalVector, 3, &f32_tmp0);

arm_dot_prod_f32(f32_directionVector, f32_normalVector, 3, &f32_tmp1);

f32_tmp2 = f32_tmp0/f32_tmp1;

arm_scale_f32(f32_normalVector, f32_tmp2, f32_normalVector, 3);

arm_sub_f32(f32_point0, f32_normalVector, f32_result, 3);

//and than we need to decide whether to add a new visible point in the point queue, or to update

//the color field of a given point in the point queue(as 4 visible point share one data structure). from this point, you will find that, it may be

//sensible for you not to diretly insert a new point into the end of point queue but to insert it in order

//when you build the pointqueue. it seems more effective.

void EnPointQueue(PointQueue_t * inputQueue, QueueNode_t inputNode);

//finally we will get an sorted queue at the end of the inner for loop

//than we need to calculate the number of invisible points between these visible points

//and to store it in each frame structure. the main purpose to do so is to offer an quick generation

//of the blank point(color field = 16'b0) between each electrical frame

//the work flow will be like this:

loop

{

dma output of the blank points;

output of the visible points;

}

/******************************************/

//some points need more detailed discussion

/******************************************/

//1.memory allocation strategy

//a quite straight forward method will be establishing a big memnory pool in advance, but the drawback of this method

//is that it's hard for you to decide the size of the memory pool. Another way would be the C runtime library method,

// and you can use build-in function malloc to allocate the memory, but it will be a quite heavy load for the m3 cpu

// as you need dynamic memeory allocation throughout the algorithm.

//2.the choice of Q format of the IQMATH library

//from the discussion above, the range of the coordnate is about 1-100, but the range of sin&cos is only 0-1,so there's a large gap between them.

//may be we can choose iq24?? Simultaneously, another big problem will be the choice between IQMATH and arm dsp library as their q format is

//incompatible with each other. as far as my knowledge is concerned, we should choose IQMATH with m3 without fpu, and cmsis dsp library with m4 with fpu.

//more detail discussion about the range of the algorithm

//x,y range is -64 to 64

//the formula is

//Tmp0 = Q(1)*sin(Theta) - Q(2)*cos(Theta);

//Tmp0 range is -128 to 128

//Tmp1 = V(1)*sin(Theta) - V(2)*cos(Theta);

//Tmp1 range is -128 to 128

//Result = Q - (Tmp1/Tmp2)*V

//because the minimal precision of the coordinate is 1, so if the result of Tmp1/Tmp2 is bigger than 128, the Result will be

//saturated. With the same reson, if (Tmp1/Tmp2)*V >= 128 or <= -127, the result will be saturated

4.系统创新欢迎分享,转载请注明来源:内存溢出

微信扫一扫

微信扫一扫

支付宝扫一扫

支付宝扫一扫

评论列表(0条)